Discover the best RAG for your own data

RAGView evaluates multiple RAG pipelines on your own dataset online, with no complex setup. It delivers clear RAG evaluation reports that help you choose the best RAG for your data.


40+ widely used RAGs, unified metrics, all in one platform.

Langflow

Build, scale, and deploy RAG and multi-agent AI apps. In RAGView, we use it to build a naive RAG pipeline.

R2R

State-of-the-art, production-grade RAG system with an agentic RAG architecture and RESTful API support.

DocsGPT

Private AI platform supporting agent building, deep research, document analysis, multiple models, and API integration.

More diverse, faster, better, and cheaper

RAGView supports multiple RAG pipelines, rapid evaluation, traceable analysis, and cost-efficient operation.

More RAGs

Aggregates 40+ mainstream RAGs, supports simultaneous evaluation of different RAGs, and enables side-by-side comparison of results.

Quick Review

No complex deployment required: just upload your dataset, start an online evaluation with one click, and select the best-fit RAG within minutes.

Better Analysis

Each evaluation provides question-level details and traceable metrics to support accurate attribution analysis.

Cost Saving

Evaluate multiple RAGs online and cut labor and resource costs by roughly 70% compared with traditional methods.

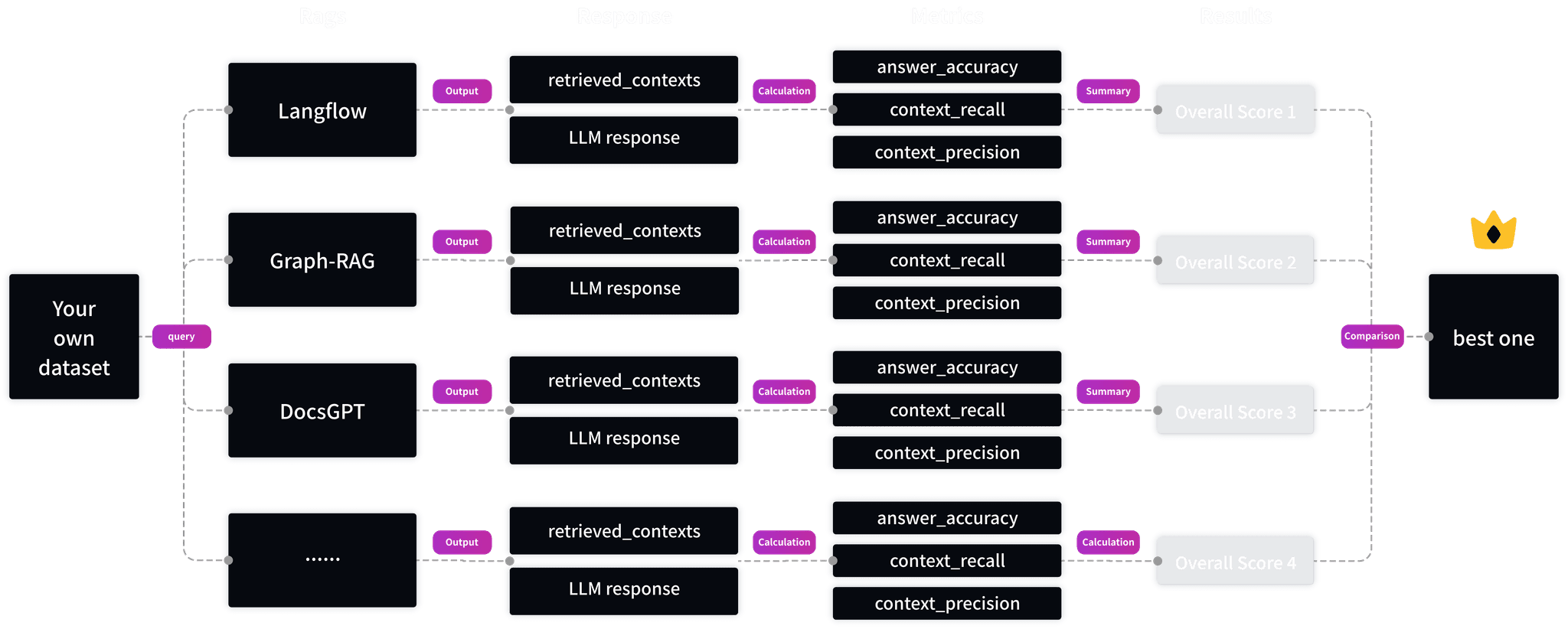

How we help you choose the optimal RAG solution

Our process saves you a lot of work: in just 15 minutes, you can see how different RAG solutions perform on your business data.

Need our help?

If you need help, you can contact us through the following channels:

Email us with your questions.

GitHub

Leave questions and suggestions in our GitHub repository.

Start Now. Find Your Best-Fit RAG.

Evaluate and compare different RAGs in minutes, not weeks, and easily choose what truly fits your data.
